Lab 5: Completely randomized ANOVA

FANR 6750: Experimental Methods in Forestry and Natural Resources Research

Fall 2023

lab05_anova.RmdToday’s topics

- One way ANOVA as a linear model

Using lm()

Using aov()

Performing an ANOVA by hand

Plotting group means and CI’s

Multiple comparisons

One-way ANOVA as a linear model

Questions

Do the means differ and by how much (what are the effect sizes?)

Which means differ from each other?

Null hypothesis

- \(H_0 : \mu_1 = \mu_2 = \mu_3 = . . . = \mu_a\)

OR

- \(H_0 : \alpha_1 = \alpha_2 = \alpha_3 = . . . = \alpha_a = 0\)

Formulation as a linear model

Just as we have seen in the last lab, many of the analyses that we will perform this semester can be thought of in multiple ways. A one-way ANOVA can be formulated as a linear model:

\[ y_{ij} = \mu + \alpha_i + \epsilon_{ij} \ \ \ \ \ \text{where} \ \ \ \ \ \epsilon_{ij} \sim \text{N}(0,\sigma) \]

Can you define each term in the context of the data? Why did we need to add additional sub-scripting to the observation and residual terms?

Exercise 1

The dataset below comes from a pilot study implemented by the US Forest Service. The service is interested in purchasing a number of chainsaws to use for various projects and fieldwork. Due to safety concerns, the service is hoping to select the chainsaw brand (A, B, C, or D) which has the lowest average kickback angle. Kickback, which refers to the sudden upward motion of the saw’s guide bar when the saw strikes a hard object, is one of the leading causes of injuries during chainsaw work. While the service would like to prioritize safety, their secondary objective is to find a reasonably priced brand. The brands range in price (D,B,A,C) with Brand D being the most expensize.

- Load the sawdata dataset and examine the data

library(FANR6750)

data("sawdata")

head(sawdata)

#> Chainsaw A B C D

#> 1 1 42 38 52 29

#> 2 2 36 50 54 35

#> 3 3 33 44 48 31

#> 4 4 39 37 49 36

#> 5 5 43 47 54 32You may notice that something seems different about this dataset than the previous ones we have looked at over the last few weeks. This is because the data are in what is called ‘wide format’. This means that each level of the grouping variable (in this case chainsaw brand) has gotten its own column in the dataframe. While wide format is often convenient for reports because it allows you to more easily see trends in a table, long format is more useful for succinctly storing data and for use in most analyses.

- Convert the dataframe from wide format to long format1

library(tidyr)

sawdata <- sawdata %>% pivot_longer(cols= c('A', 'B', 'C', 'D'),

names_to= 'Brand',

values_to= 'angle')

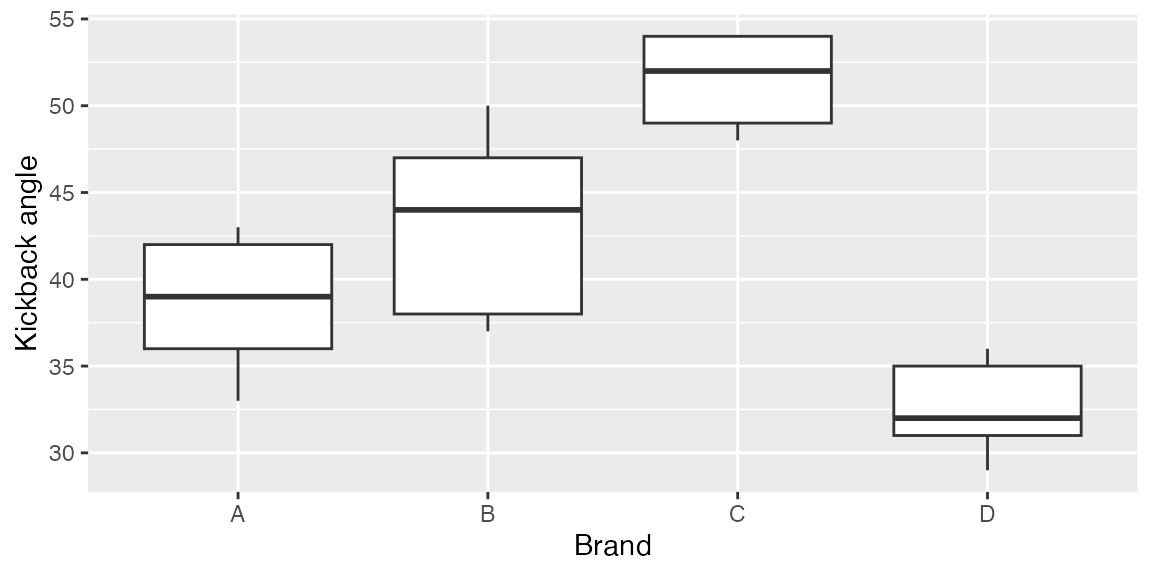

sawdata$Brand <- as.factor(sawdata$Brand)- Visualize the data

ggplot(sawdata, aes(x = Brand, y = angle)) +

geom_boxplot() +

scale_y_continuous("Kickback angle")

Using lm()

- Perform the analysis using the lm() function

mod1 <- lm(angle~ Brand, data= sawdata)

summary(mod1)

#>

#> Call:

#> lm(formula = angle ~ Brand, data = sawdata)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -6.2 -2.8 0.5 2.8 6.8

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 38.600 1.804 21.395 3.38e-13 ***

#> BrandB 4.600 2.551 1.803 0.090272 .

#> BrandC 12.800 2.551 5.017 0.000127 ***

#> BrandD -6.000 2.551 -2.352 0.031836 *

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 4.034 on 16 degrees of freedom

#> Multiple R-squared: 0.7835, Adjusted R-squared: 0.7429

#> F-statistic: 19.3 on 3 and 16 DF, p-value: 1.446e-05

model.matrix(mod1)

#> (Intercept) BrandB BrandC BrandD

#> 1 1 0 0 0

#> 2 1 1 0 0

#> 3 1 0 1 0

#> 4 1 0 0 1

#> 5 1 0 0 0

#> 6 1 1 0 0

#> 7 1 0 1 0

#> 8 1 0 0 1

#> 9 1 0 0 0

#> 10 1 1 0 0

#> 11 1 0 1 0

#> 12 1 0 0 1

#> 13 1 0 0 0

#> 14 1 1 0 0

#> 15 1 0 1 0

#> 16 1 0 0 1

#> 17 1 0 0 0

#> 18 1 1 0 0

#> 19 1 0 1 0

#> 20 1 0 0 1

#> attr(,"assign")

#> [1] 0 1 1 1

#> attr(,"contrasts")

#> attr(,"contrasts")$Brand

#> [1] "contr.treatment"Can you interpret each estimate? Why are only the last three brands shown (what happened to Brand A)? What does each p-value represent and what hypothesis are they testing?

Using aov()

- Perform the analysis using the aov() function

aov1 <- aov(angle ~ Brand, data = sawdata)- View the ANOVA table

summary(aov1)

#> Df Sum Sq Mean Sq F value Pr(>F)

#> Brand 3 942.6 314.18 19.3 1.45e-05 ***

#> Residuals 16 260.4 16.27

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1What can we conclude from this ANOVA table? Does there appear to be more or less information (or just different) provided here than when we used the lm() function?

The default output from summary is fine for viewing in

the console but what if you want to include the ANOVA table in a report

or paper? You could manually create a table and copy/paste the values,

but that’s a pain. Luckily, there is a handy package called

broom that turns the output from many model functions into

cleaned-up data frames2:

broom::tidy(aov1)

#> # A tibble: 2 × 6

#> term df sumsq meansq statistic p.value

#> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 Brand 3 943. 314. 19.3 0.0000145

#> 2 Residuals 16 260. 16.3 NA NAIn R Markdown, we can even include that data frame as a nicely formatted table directly in the knitted document:

aov_df <- broom::tidy(aov1)

options(knitr.kable.NA = '') # don't print NA's in table

knitr::kable(aov_df,

col.names = c("Source", "df", "SS", "MS", "F", "p"),

align = 'c', format = "html") | Source | df | SS | MS | F | p |

|---|---|---|---|---|---|

| Brand | 3 | 942.55 | 314.1833 | 19.30466 | 1.45e-05 |

| Residuals | 16 | 260.40 | 16.2750 |

Getting estimates of group means ( \(\mu\)’s) and SE’s

model.tables(aov1, type = "means", se = TRUE)

#> Tables of means

#> Grand mean

#>

#> 41.45

#>

#> Brand

#> Brand

#> A B C D

#> 38.6 43.2 51.4 32.6

#>

#> Standard errors for differences of means

#> Brand

#> 2.551

#> replic. 5Getting estimates of effect sizes ( \(\alpha\)’s) and SE’s

model.tables(aov1, type = "effects", se = TRUE)

#> Tables of effects

#>

#> Brand

#> Brand

#> A B C D

#> -2.85 1.75 9.95 -8.85

#>

#> Standard errors of effects

#> Brand

#> 1.804

#> replic. 5Constructing an ANOVA table by hand

Just as we did in the last lab, we will see below how R

is actually doing the ANOVA and we’ll get practice constructing an ANOVA

table by hand.

Grand mean

(ybar. <- mean(sawdata$angle))

#> [1] 41.45Group means

As with most things in R, there are several ways to

calculate the group means. First, find the group means, the hard

way:

A <- sawdata$angle[sawdata$Brand == "A"]

B <- sawdata$angle[sawdata$Brand == "B"]

C <- sawdata$angle[sawdata$Brand == "C"]

D <- sawdata$angle[sawdata$Brand == "D"]

(ybar.i <- c(mean(A), mean(B), mean(C), mean(D)))

#> [1] 38.6 43.2 51.4 32.6Better yet, use tapply to find the group means (the base

R way):

(ybar.i <- tapply(sawdata$angle, INDEX = sawdata$Brand, FUN = mean))

#> A B C D

#> 38.6 43.2 51.4 32.6Finally, find the group means, the tidyverse way:

library(dplyr)

(ybar.i <- sawdata %>%

group_by(Brand) %>%

summarise(mu = mean(angle)))

#> # A tibble: 4 × 2

#> Brand mu

#> <fct> <dbl>

#> 1 A 38.6

#> 2 B 43.2

#> 3 C 51.4

#> 4 D 32.6Although each of these methods does what we want - return the mean kickback angle for each group - note that they do not return the same type of object.

Use the class() function to see what type of object each

one returns.

When might the output from each method be useful?

Sum of squares

Sums of squares among

# Number of saw brands

a <- length(unique(sawdata$Brand))

# Number of measurements of each brand (note, this assumes balanced design)

n <- nrow(sawdata)/a

SSa <- n * sum((ybar.i$mu - ybar.)^2)

SSa

#> [1] 942.55Sums of squares within3

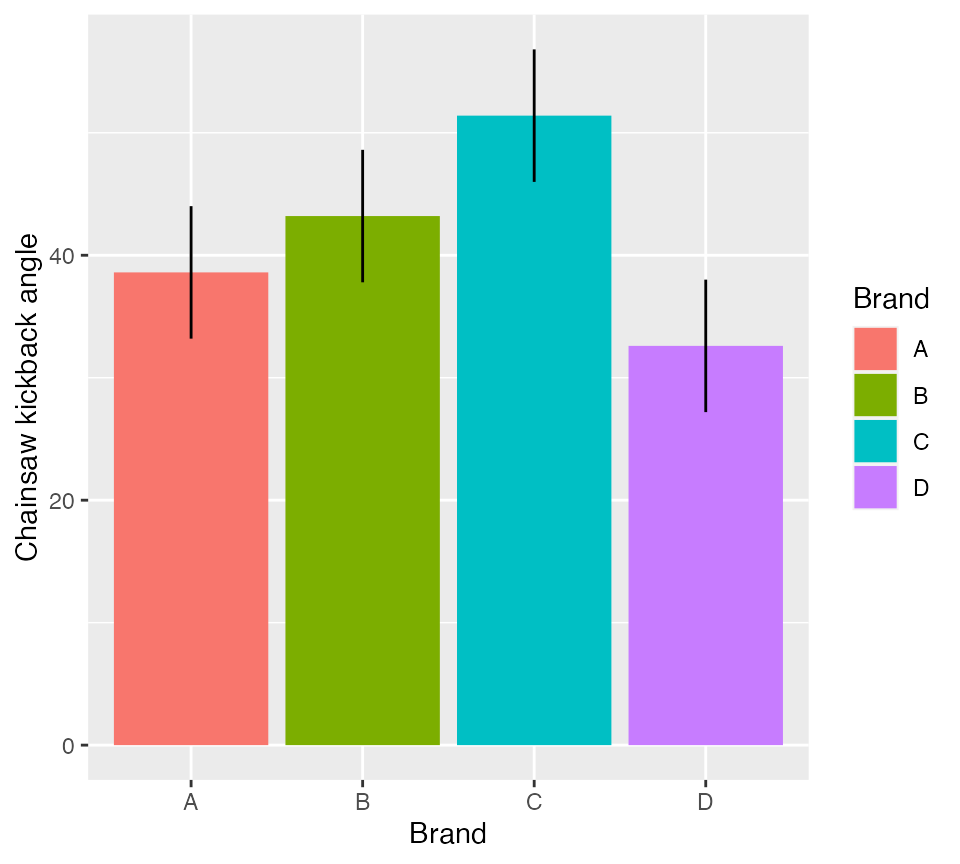

Plotting group means and confidence intervals

Although we have performed the ANOVA in multiple ways and can conclude that there is at least one difference in means between the chainsaw brands, we still have a few tasks to perform. The first is to plot the data in a meaningful way. With this type of data there are at least two ways we could display the results. An example of one is below; we’ll practice the second one in the homework assignment.

In an ANOVA context, confidence intervals can be constructed using the equation:

\[ CI = Point\: estimate \pm t_{\alpha/2,a(n-1)}\times SE \]

# Extract the SE

mean.SE <- 2.551 # hard coded from model.tables()

# OR

table <- model.tables(aov1, type = "means", se = TRUE)

mean.SE <- as.numeric(table$se) # more explicit

mean.SE

#> [1] 2.55147

# Compute confidence intervals

tc <- qt(0.975, a*(n-1))

lowerCI <- ybar.i$mu - tc*mean.SE

upperCI <- ybar.i$mu + tc*mean.SE

ggplot(ybar.i, aes(x = Brand, y = mu, fill = Brand)) +

geom_col() +

geom_errorbar(aes(ymin = lowerCI, ymax = upperCI), width = 0) +

scale_y_continuous("Chainsaw kickback angle")

Figure 1: Mean kickback angle from four chainsaw brands with 95% CI

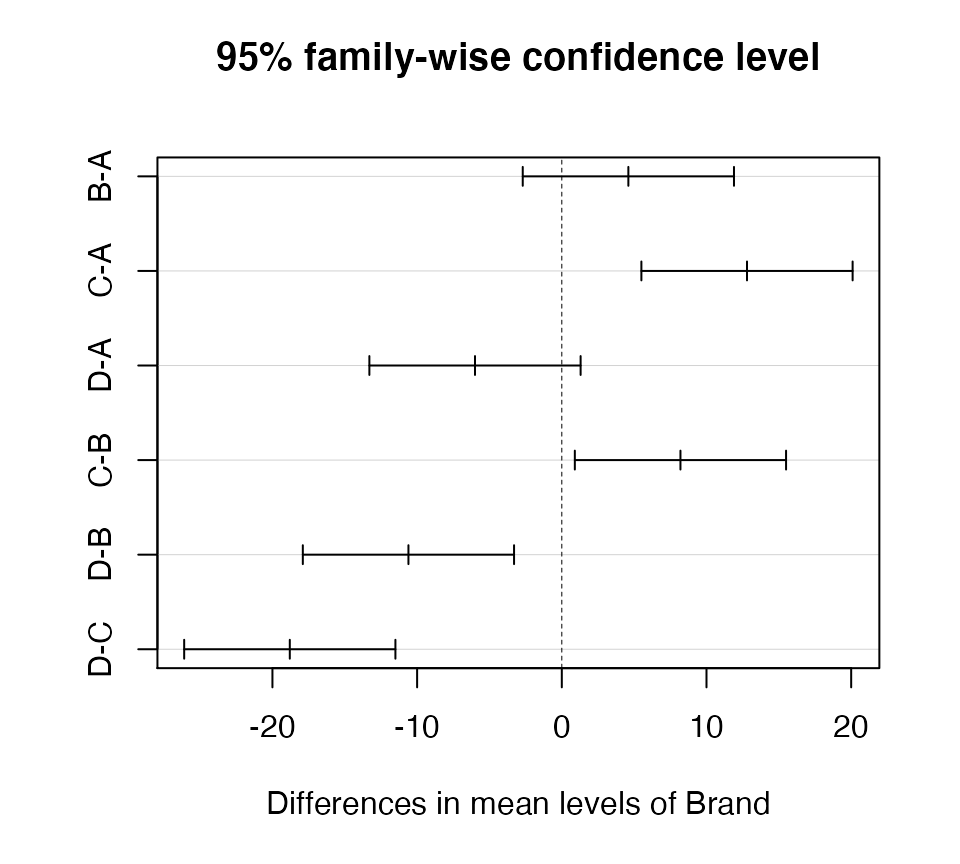

Multiple comparisons

TukeyHSD(aov1)

#> Tukey multiple comparisons of means

#> 95% family-wise confidence level

#>

#> Fit: aov(formula = angle ~ Brand, data = sawdata)

#>

#> $Brand

#> diff lwr upr p adj

#> B-A 4.6 -2.6998067 11.899807 0.3078582

#> C-A 12.8 5.5001933 20.099807 0.0006595

#> D-A -6.0 -13.2998067 1.299807 0.1276316

#> C-B 8.2 0.9001933 15.499807 0.0250908

#> D-B -10.6 -17.8998067 -3.300193 0.0037406

#> D-C -18.8 -26.0998067 -11.500193 0.0000087 Which brands were significantly different from one another? How

would you answer the original question at the start of this

exercise?

Which brands were significantly different from one another? How

would you answer the original question at the start of this

exercise?

Assignment

A biologist wants to compare the growth of four different tree species she is considering for use in reforestation efforts. All 32 seedlings of the four species are planted at the same time in a large plot. Heights in meters are recorded after several years.

Create an R Markdown file to do the following:

- Create an

Rchunk to load the data using:

Create a header called “Hypotheses” and under this header, indicate, in plain English, what the null and alternative hypotheses are. Also use R Markdown’s equation features to write these hypotheses using mathematical notation.

Create a header called “ANOVA by hand”. Under this header, perform an ANOVA analysis (degrees of freedom, sums-of-squares, mean-squares, and F-value) without using

aov(). Compute either the critical value of F, or the p-value. Be sure to annotate your code.Create a header called “ANOVA in R”. Under this header, perform an ANOVA analysis on the data using the

lm()function and then again using theaov()function.-

Under a subheader called “ANOVA results”:

indicate whether or not the null hypothesis can be rejected at the \(\alpha = 0.05\) level

include a well-formatted ANOVA table using the

broom::tidy()functioncreate a barplot showing the effect sizes and 95% confidence intervals around them

Create a header called “Which means are different?”. Use Tukey’s HSD test to determine which pairs of means differ at the \(\alpha = 0.05\) level. Include a few sentences indicating which pairs are different. If there is an inconsistency between the initial ANOVA results and the post-hoc comparisons, explain why that might be the case.

A few things to remember when creating the assignment:

Be sure the output type is set to:

output: html_documentTitle the document:

title: "Homework 1"Be sure to include your first and last name in the

authorsectionBe sure to set

echo = TRUEin allRchunks so we can see both your code and the outputPlease upload both the

htmland.Rmdfiles when you submit your assignmentSee the R Markdown reference sheet for help with creating

Rchunks, equations, tables, etc.

In this code chunk, we introduce the idea of pipes in R. A pipe (shown with %>%) is a way of stacking multiple functions on top of each other in an easy to read way. Many packages (including tidyr) use pipes instead of having functions nested inside of each other. While pipes are generally considered to have multiple advantages related to their readability as well as modular structure, there are ways to perform any of these steps without their use.↩︎

Notice the use of the double colon in the code chunk below. This is used in

Rto explicitly link a function to the package from where it came. Because there is an ever expanding list of available packages and many of them contain functions that have the same names but perform different tasks, it is necessary to tellRwhich particular function you are trying to use. If we did not specify the package using the double colon operator,Rwould use the function from whatever was the most recently loaded package in your library that contained that function name.↩︎Notice the use of the times= argument. How do we decide whether to use times= or each=? In this case, we need to look at the order of the data in the dataframe. Because of how the data is ordered, we are interested in taking the entire mu vector and repeating it 5 times. If the data were ordered such that all the Brand A observations were first etc. then we would have taken each element in the mu vector and repeated it each= 5.↩︎