Poisson GLM for modeling count data

WILD6900

2021-01-05

poisson_glm.RmdIn this activity, we will analyze a small data set containing counts of both population size and reproductive success using Poisson and Binomial GLMs.

Objectives

Analyze count data using Poisson and Binomial GLMs

Gain experience diagnosing JAGS output to check convergence

-

Rfunctions used in this exercise:

Data

The data for this activity comes from a long-term project that monitored a population of peregrine falcons nesting in the French Jura from 1964 to 2003 1.

Load and inspect the data:

| Year | Pairs | R.Pairs | Eyasses |

|---|---|---|---|

| 1964 | 34 | 19 | 27 |

| 1965 | 45 | 18 | 30 |

| 1966 | 39 | 22 | 36 |

| 1967 | 36 | 19 | 37 |

| 1968 | 20 | 8 | 19 |

| 1969 | 18 | 7 | 10 |

To see what each column represents, type:

?falconsAnalysis 1: Change in population size

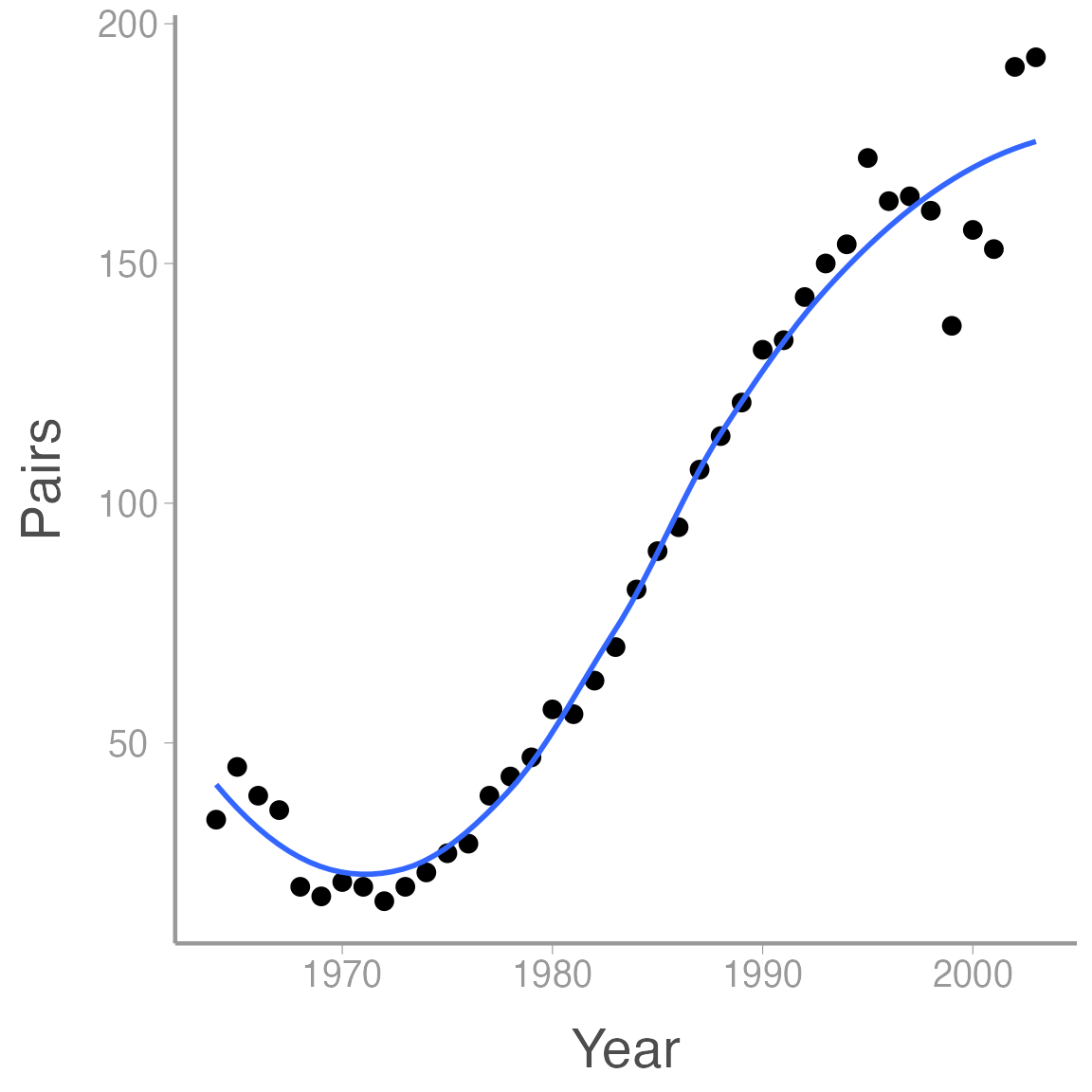

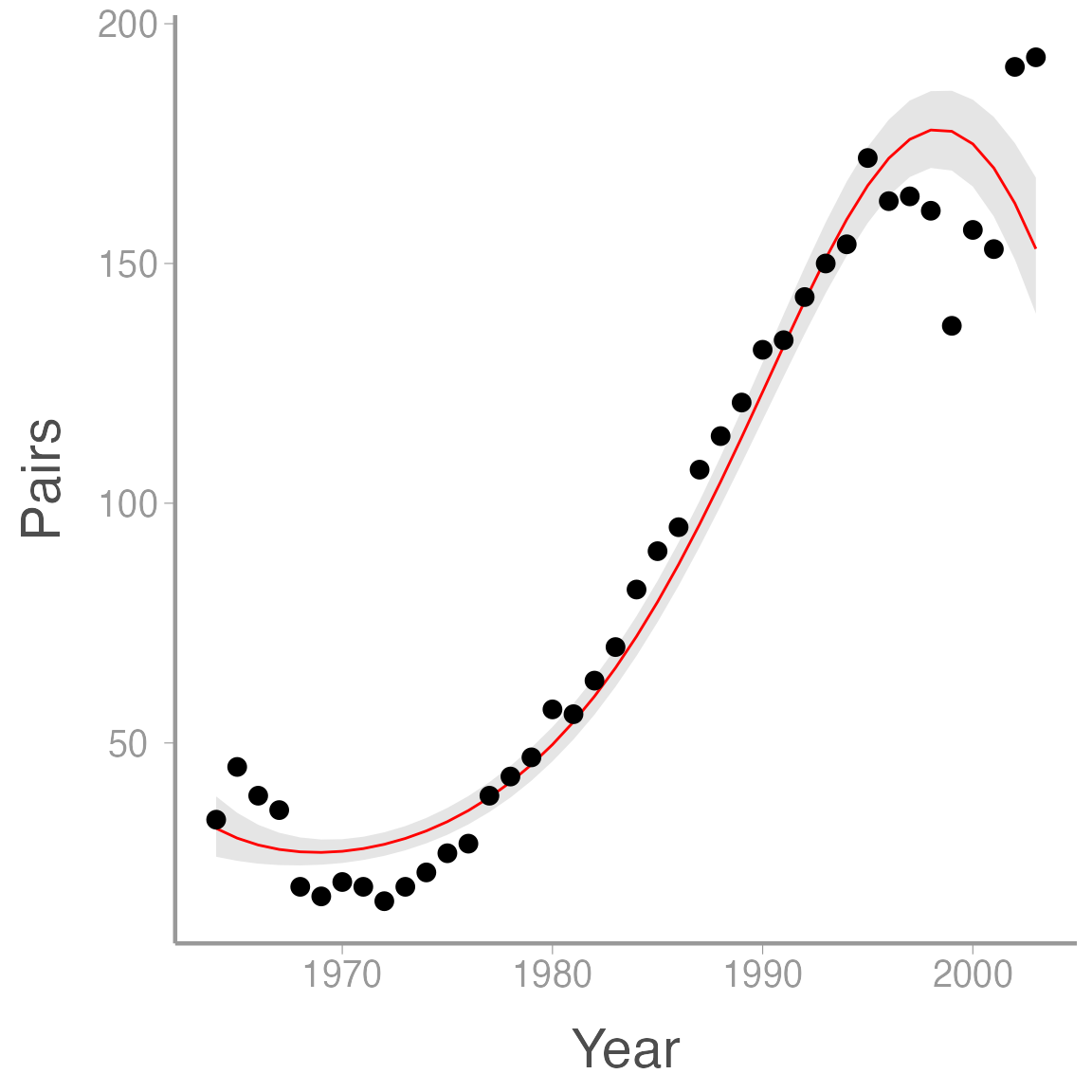

The first model we will fit examines change in the number of adult falcon pairs over time. Plotting the data shows that this change has not been linear:

ggplot(falcons, aes(x = Year, y = Pairs)) + geom_point() + stat_smooth(se = FALSE)

After a short decline at the beginning of the study period, the population then increased dramatically before perhaps reaching its carrying capacity.

Modeling non-linear effects using linear models

How can we model the non-linear change in abundance if, by definition, linear models model linear effects? Using polynomials!

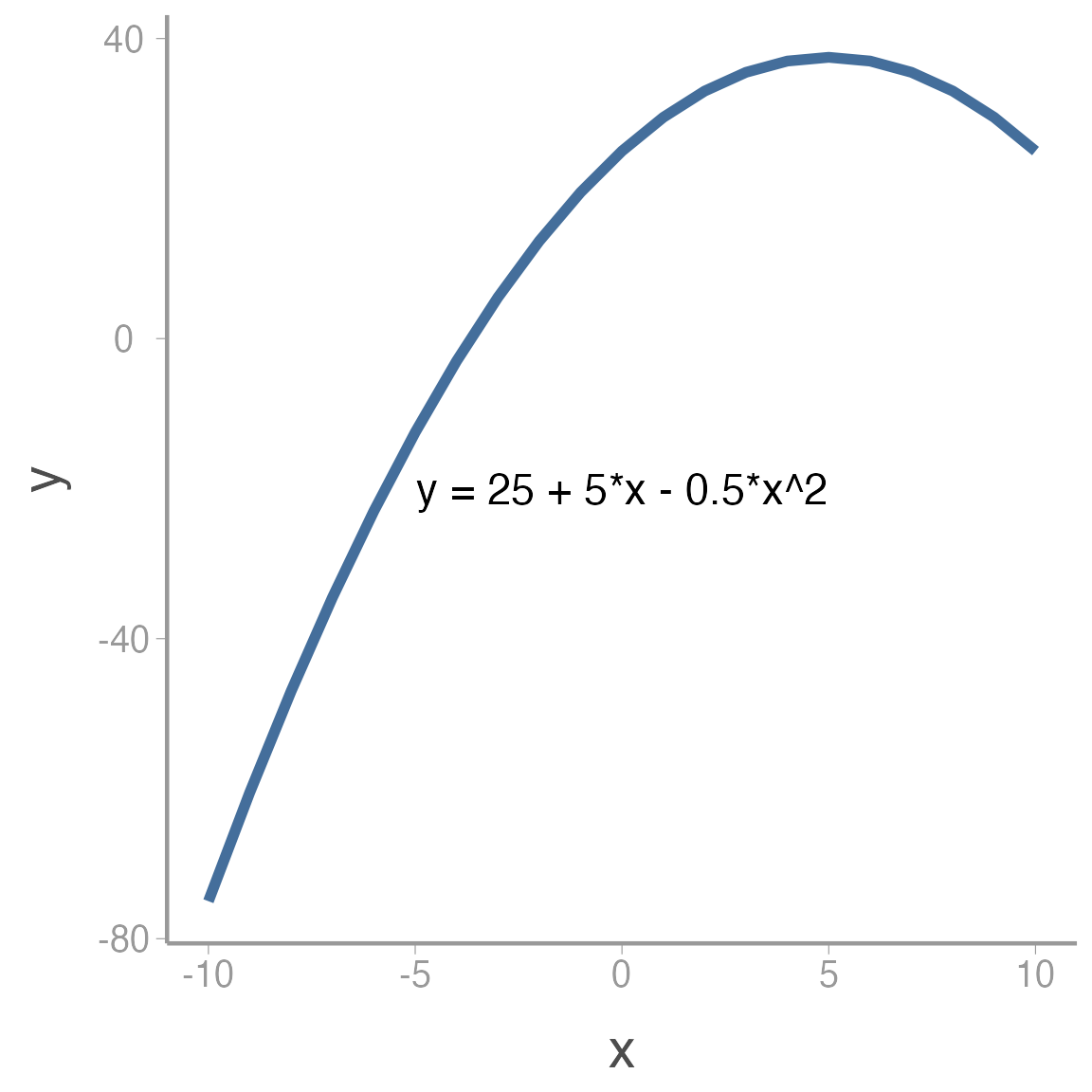

Remember the equation for a curved line with a single peak (or bottom):

\[\Large y = a + b \times x + c \times x^2\]

Where \(a\) is the maximum (or minimum) value of \(y\), \(b\) is the value of \(x\) where this maximum (or minimum) occurs and \(c\) determines whether the peak is a maximum (\(c<0\)) or a minimum (\(c>0\)).

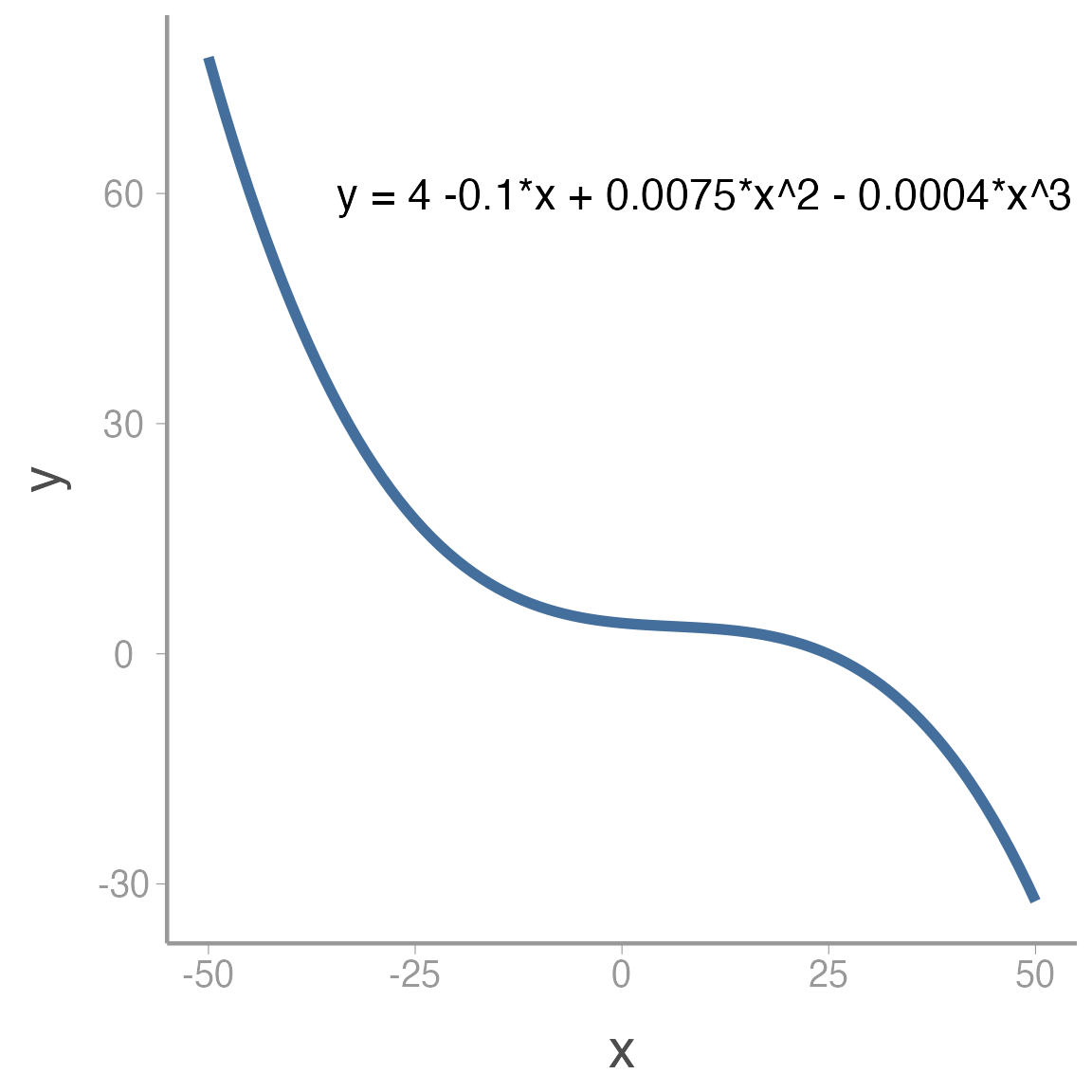

We can add more complex shape by adding additional polynomial terms. For example, including a cubic term creates an s-shaped curve:

\[\Large y = a + b \times x + c \times x^2 + d \times x^3\]

Including polynomial terms in the linear predictor on the model gives us enormous flexibility to model non-linear relationships using GLMs.

Modeling change in falcon counts

To build a model for the falcon data, we need to define the components required in all GLMs (the distribution, link function, and linear predictor). Because these are counts, a natural choice for the distribution is:

\[C_t \sim Poisson(\lambda_t)\] where \(C_t\) is the observed count in year \(t\) and \(\lambda_t\) is the expected count.

As we learned in lecture, the conventional link function for count data is the log-link:

\[log(\lambda_t) = log(E(\lambda_t))\]

Finally, we need to write the linear predictor. Based on the preliminary visualization of the data, a cubic polynomial might be appropriate to capture the non-linear change over time:

\[log(\lambda_t) = \alpha + \beta_1 \times year_t + \beta_2 \times year^2_t + \beta_3 \times year^3_t\]

Accessing and viewing the JAGS model

A JAGS model file that corresponds to the above model is already included in the WILD6900 package. You can access that file and view the model using the following code:

mod.file <- system.file("jags/GLM_Poisson.jags", package = "WILD6900")

file.show(mod.file)model {

# Priors

alpha ~ dnorm(0, 0.33)

beta1 ~ dnorm(0, 0.33)

beta2 ~ dnorm(0, 0.33)

beta3 ~ dnorm(0, 0.33)

# Likelihooda

for (i in 1:n){

C[i] ~ dpois(lambda[i])

log(lambda[i]) <- alpha + beta1 * year[i] + beta2 * pow(year[i],2) + beta3 * pow(year[i],3)

} #i

}From this file, you can see that we will use relatively non-informative normal priors on each of the regression coefficients.

You can also see that the likelihood statement is very similar to the linear regression model from the last lecture, with a few minor differences. First, because we assume the observed falcon counts come from a Poisson distribution, we use dpois(lambda[i]) rather than dnorm(mu[i], tau). Also, we have to apply the log-link function to the predicted counts (log(lambda[i])=...). Notice that JAGS allows you to model the transformed predicted counts on the left hand side of the linear predictor equation

Questions

Plot a histogram of random samples from the normal prior used in the model (remember to convert the precision of

0.33to standard deviation). As you can see, this is not as vague as the normal priors we have used in the past. What is the advantage of using less-vague normal priors?In the linear regression model we fit in the last lecture, we also had a prior for \(\tau\), the (inverse) of the process variance. Why do we not include that parameter in this model?

Creating the

lambda[i]object is not strictly necessary since it is a deterministic function of year. If you wanted to have fewer lines of code, you could include the linear predictor directly inside of thedpois()function instead oflambda[i], though you would need to appropriately transform the linear predictor. What transformation would you use to put the linear predictor on the count scale?

Fitting the model

Before fitting the model, we need to prepare the input for JAGS. This includes:

storing the data as a named list

creating a function to randomly generate the initial values for each parameter

creating a vector with the parameters we want JAGS to monitor

set the MCMC settings

We’ve mentioned several times, it’s often a bad idea to include covariate values that are too far from 0. For this reason, we will first scale year to \(mean=0\) and \(sd=1\):

year <- (falcons$Year - mean(falcons$Year))/sd(falcons$Year)

jags_data <- list(C = falcons$Pairs, year = year, n = nrow(falcons))

jags_inits <- function(){list(alpha = rnorm(1), beta1 = rnorm(1), beta2 = rnorm(1), beta3 = rnorm(1))}

params <- c("alpha", "beta1", "beta2", "beta3", "lambda")

nC <- 3

nI <- 10000

nB <- 2500

nT <- 1Now we’re ready to run the model:

falcon_mod <- jagsUI::jags(data = jags_data, inits = jags_inits,

parameters.to.save = params,

model.file = mod.file,

n.chains = nC, n.iter = nI,

n.burnin = nB, n.thin = nT)

#>

#> Processing function input.......

#>

#> Done.

#>

#> Compiling model graph

#> Resolving undeclared variables

#> Allocating nodes

#> Graph information:

#> Observed stochastic nodes: 40

#> Unobserved stochastic nodes: 4

#> Total graph size: 349

#>

#> Initializing model

#>

#> Adaptive phase.....

#> Adaptive phase complete

#>

#>

#> Burn-in phase, 2500 iterations x 3 chains

#>

#>

#> Sampling from joint posterior, 7500 iterations x 3 chains

#>

#>

#> Calculating statistics.......

#>

#> Done.View a portion of the results (printing all of the lambda values takes up too much room):

falcon_mod$summary[1:10,]| mean | sd | 2.5% | 25% | 50% | 75% | 97.5% | Rhat | n.eff | overlap0 | f | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha | 4.2317 | 0.0301 | 4.1721 | 4.2115 | 4.2319 | 4.2520 | 4.2907 | 1 | 22500 | 0 | 1.0000 |

| beta1 | 1.1153 | 0.0477 | 1.0218 | 1.0830 | 1.1152 | 1.1473 | 1.2092 | 1 | 22500 | 0 | 1.0000 |

| beta2 | 0.0059 | 0.0242 | -0.0421 | -0.0103 | 0.0060 | 0.0223 | 0.0527 | 1 | 14586 | 1 | 0.6007 |

| beta3 | -0.2325 | 0.0251 | -0.2820 | -0.2493 | -0.2324 | -0.2155 | -0.1836 | 1 | 14889 | 0 | 1.0000 |

| lambda[1] | 32.1846 | 3.2008 | 26.2708 | 29.9611 | 32.0562 | 34.2767 | 38.8524 | 1 | 5548 | 0 | 1.0000 |

| lambda[2] | 30.1530 | 2.5479 | 25.4171 | 28.3835 | 30.0654 | 31.8288 | 35.4304 | 1 | 5535 | 0 | 1.0000 |

| lambda[3] | 28.7233 | 2.0693 | 24.8243 | 27.3004 | 28.6677 | 30.0927 | 32.9410 | 1 | 5679 | 0 | 1.0000 |

| lambda[4] | 27.7935 | 1.7253 | 24.5276 | 26.6171 | 27.7522 | 28.9522 | 31.2708 | 1 | 6105 | 0 | 1.0000 |

| lambda[5] | 27.2926 | 1.4886 | 24.4605 | 26.2753 | 27.2657 | 28.2855 | 30.2921 | 1 | 7035 | 0 | 1.0000 |

| lambda[6] | 27.1727 | 1.3392 | 24.6066 | 26.2592 | 27.1462 | 28.0620 | 29.8622 | 1 | 8900 | 0 | 1.0000 |

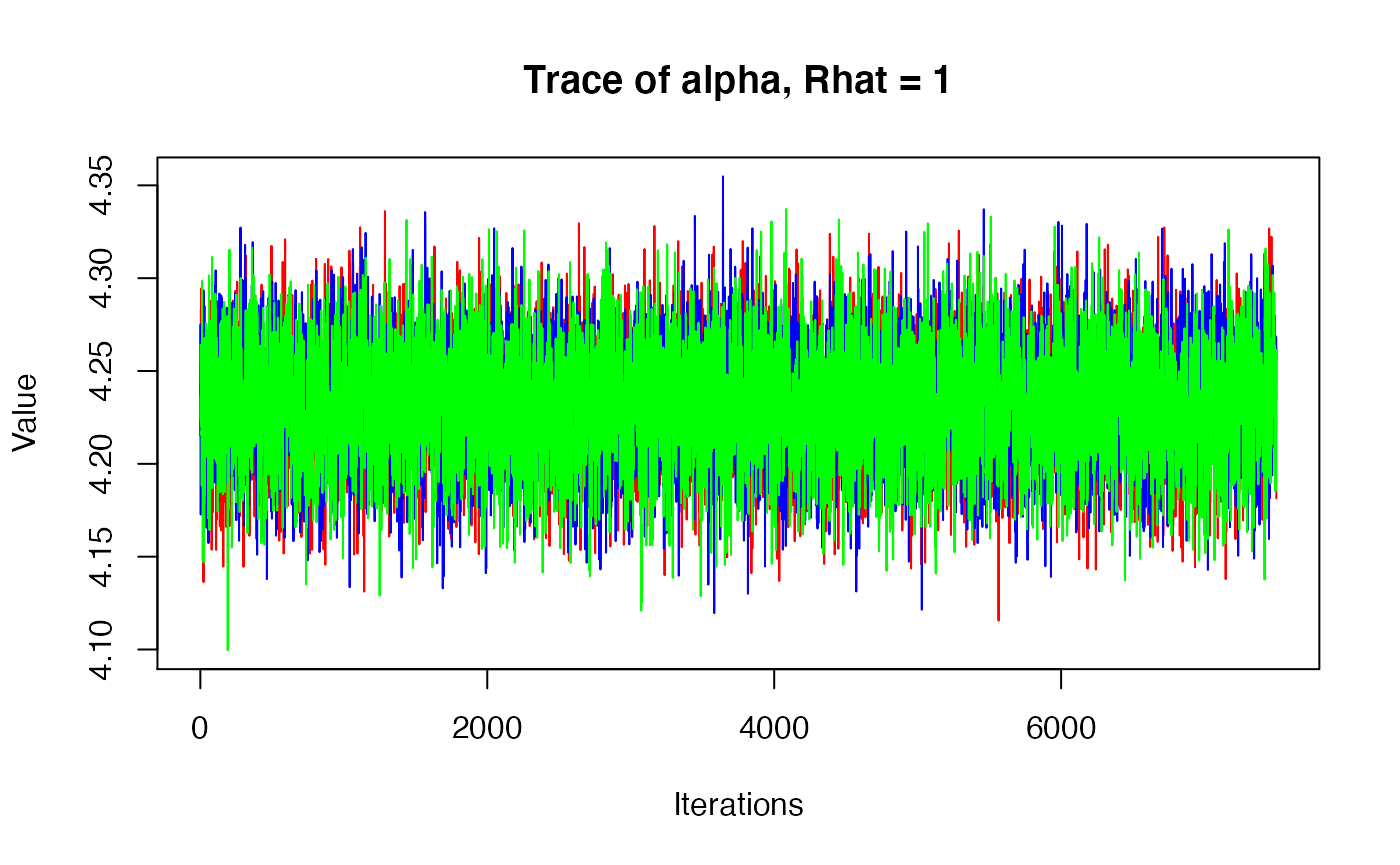

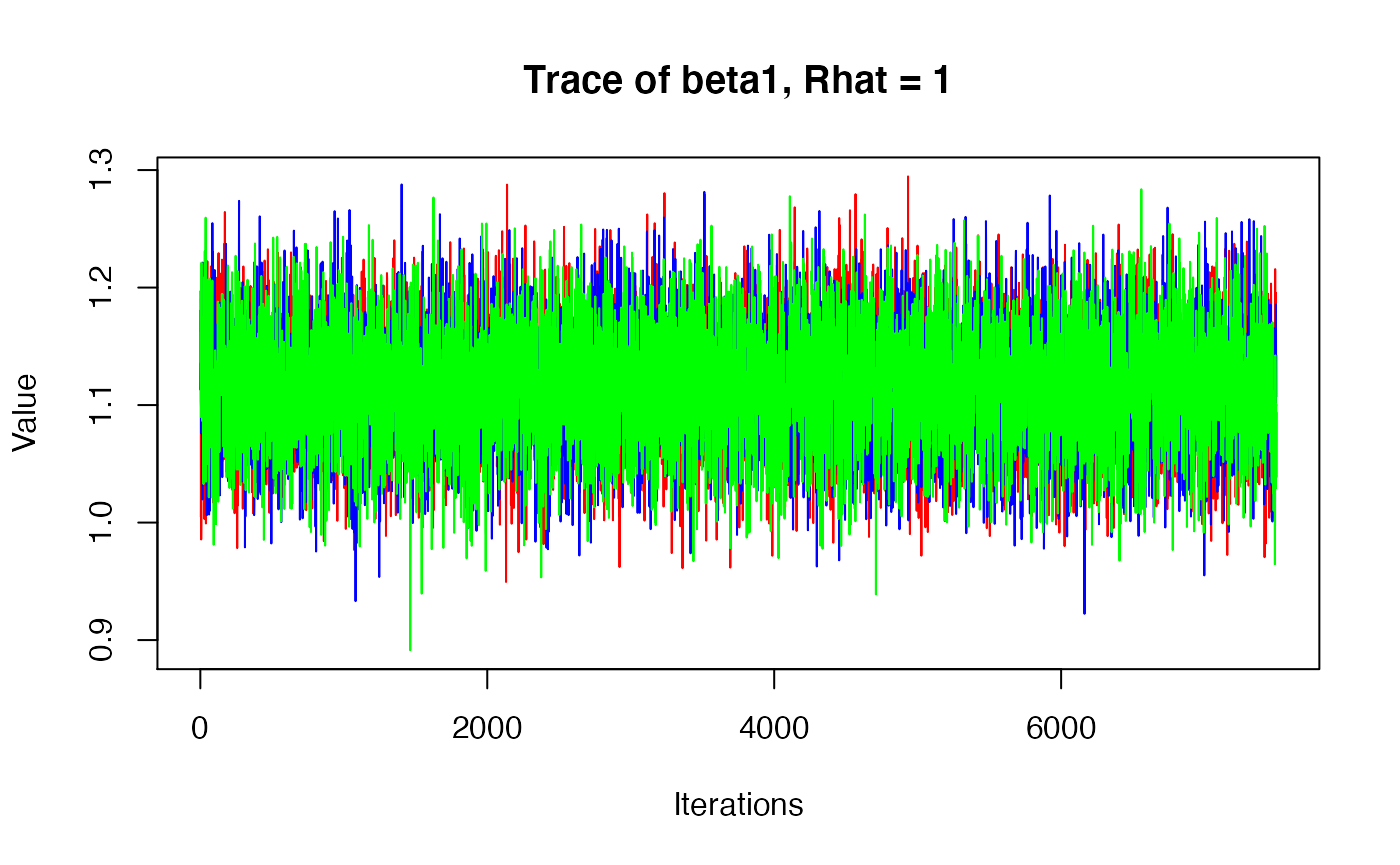

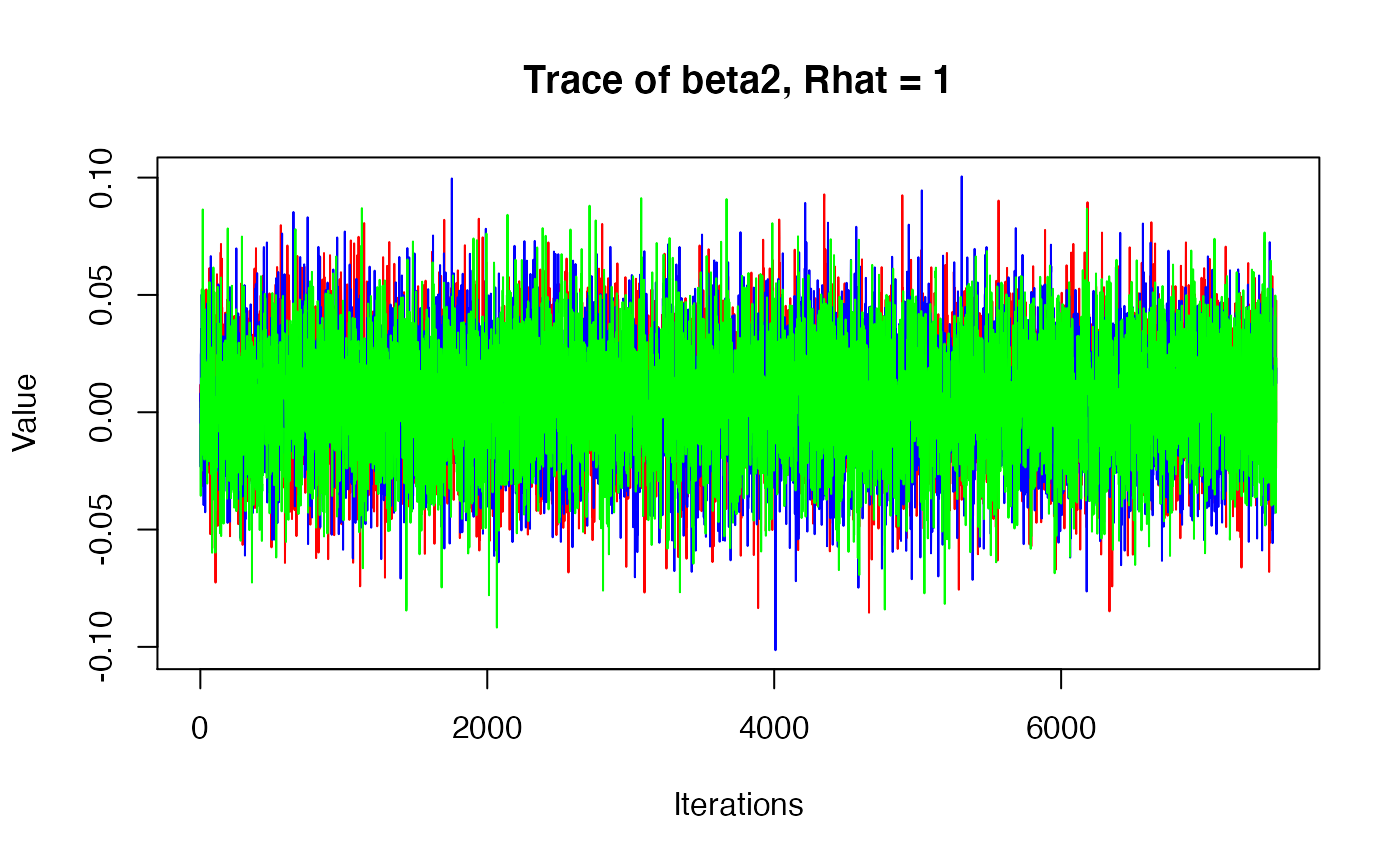

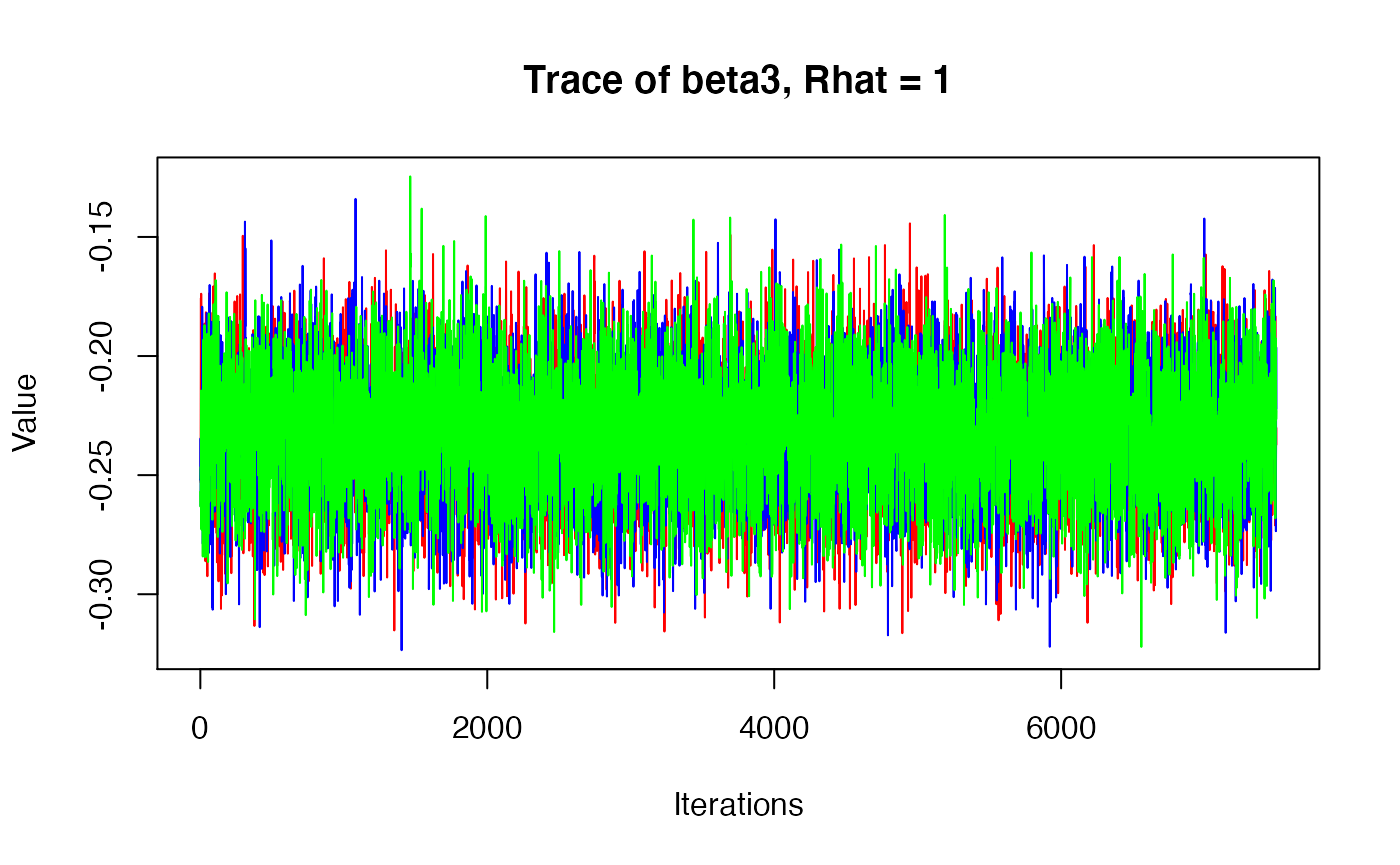

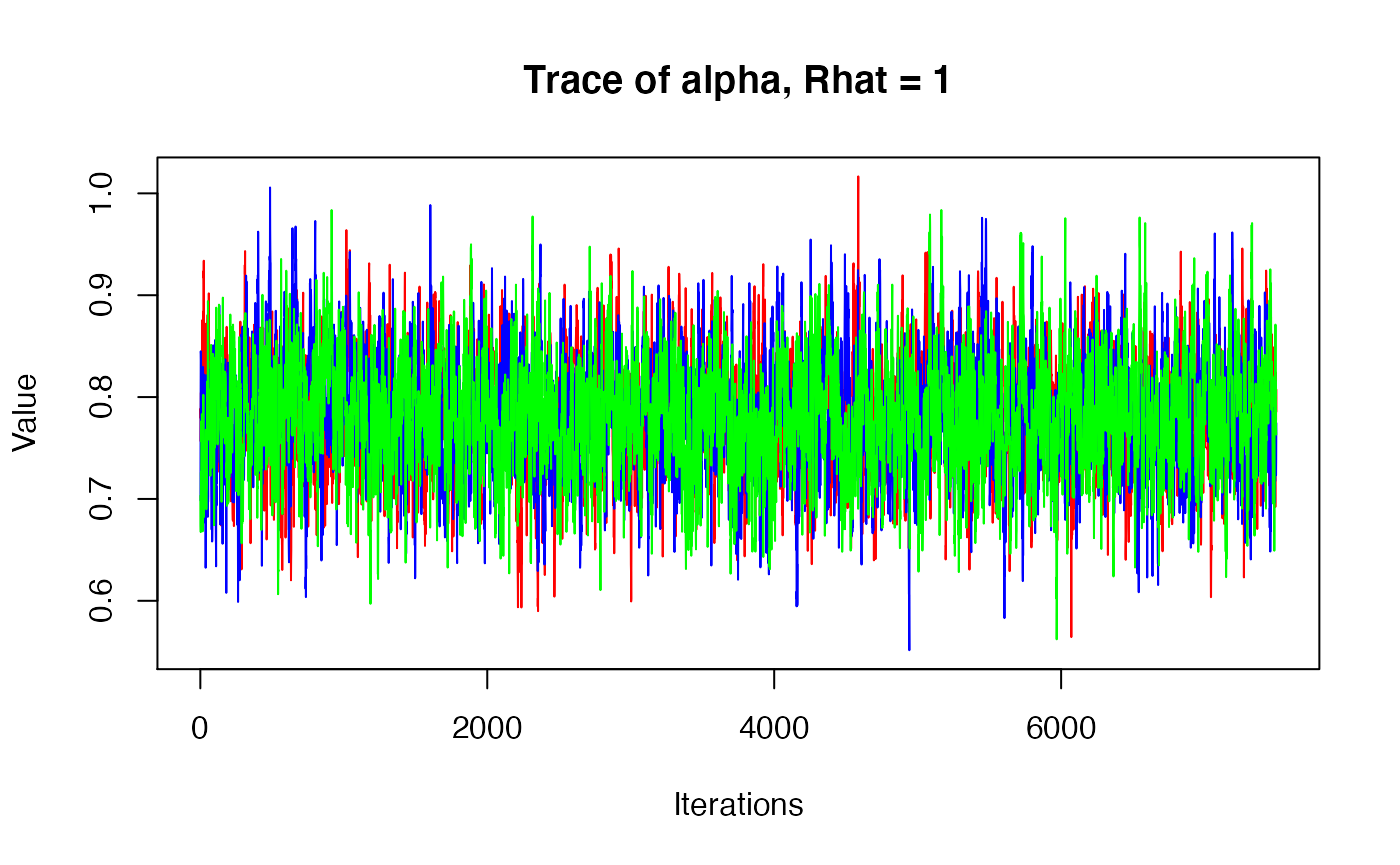

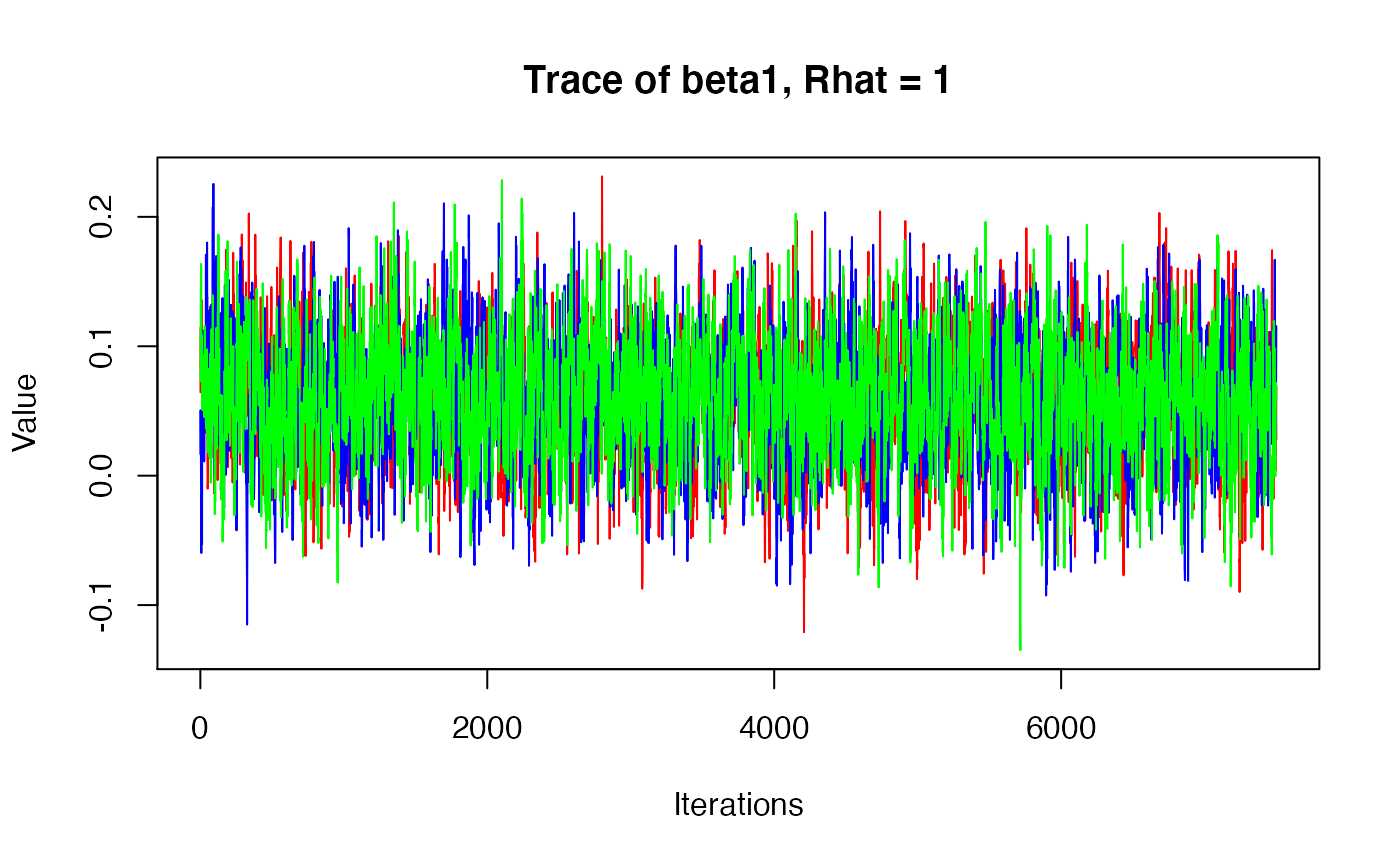

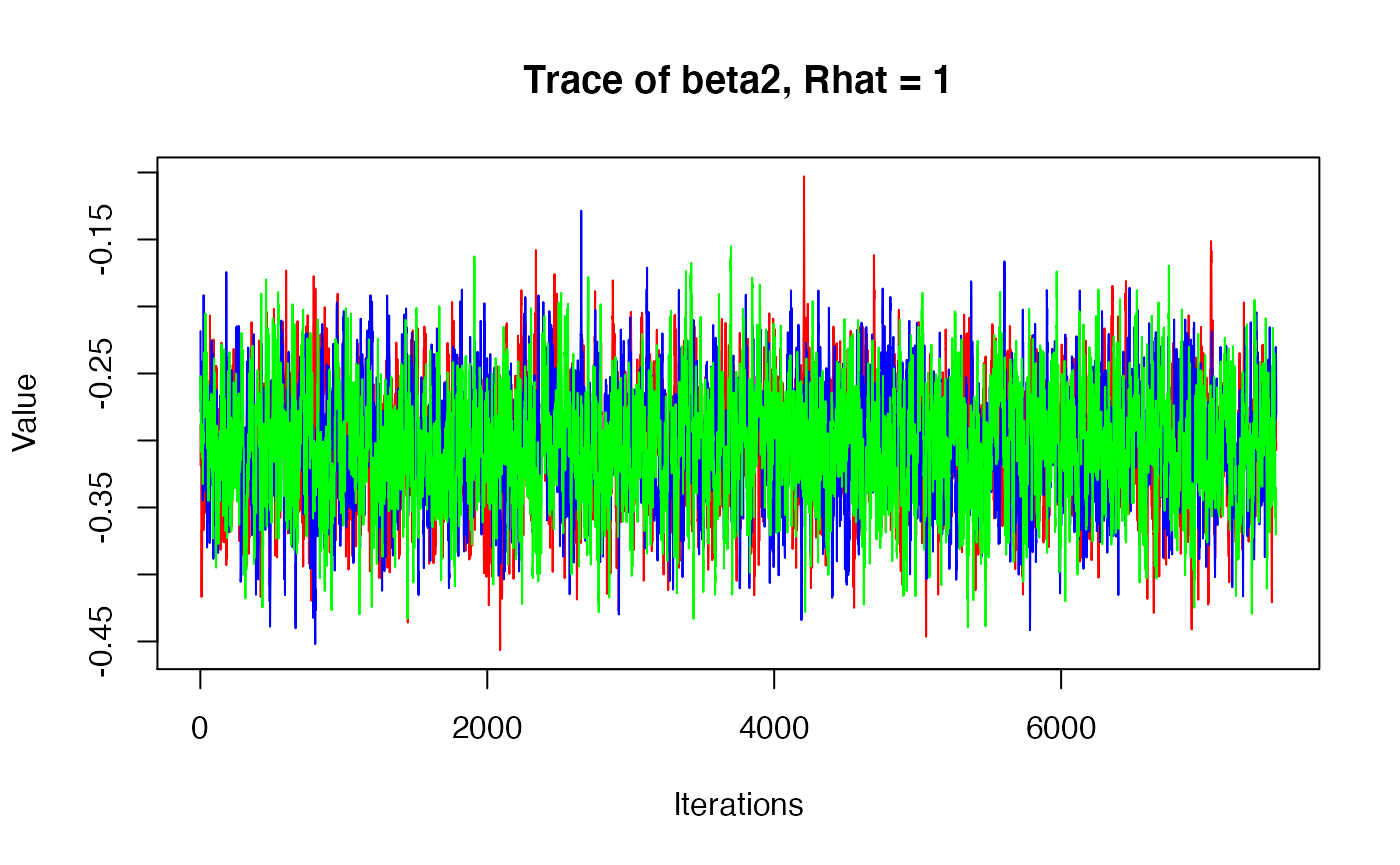

Notice that all Rhat are less than 1.1, indicating that all parameters appear to have converged. Also note f for beta2 - the posterior mean is 0.006 but there is only a 60.1% probability that it is greater than 0.

As usual, let’s check the trace plots to see how they look:

# View traceplots for alpha, beta1, beta2, and beta3 (not for lambda)

jagsUI::traceplot(falcon_mod, parameters=params[-5])

By monitoring lambda we can also plot the predicted counts along with the observed counts. First, we add the posterior means and upper/lower bounds of the 95% credible interval to the falcons data frame, then use ggplot to visualize:

falcons <- dplyr::mutate(falcons, lambda = falcon_mod$mean$lambda,

q2.5 = falcon_mod$q2.5$lambda,

q97.5 = falcon_mod$q97.5$lambda)

ggplot(falcons) +

geom_ribbon(aes(x = Year, ymin = q2.5, ymax = q97.5), fill = "grey90") +

geom_path(aes(x = Year, y = lambda), color = "red") +

geom_point(aes(x = Year, y = Pairs)) +

scale_y_continuous("Pairs")

Analysis 2: Nest success model

Next, let’s use the falcons data set to fit another type of GLM - the binomial GLM. Hopefully this exercise will show you that once you’re comfortable writing out and coding the GLM components (distribution, link function, and linear predictor), it is extremely easy to fit models with different distributional assumptions.

To estimate reproductive success (i.e., the probability that a pair successfully produces offspring), we will model the number of reproductive pairs (falcons$R.Pairs) as a function of the total number of pairs (falcons$Pairs).

Because the total number of reproductive pairs cannot exceed the total number of pairs, the counts in falcons$.RPairs are bounded to be less than falcons$Pairs. In this case, the Poisson distribution is not an appropriate count model. Instead, we will use the binomial distribution:

\[C_t \sim binomial(N_t, p_t)\]

Our goal is to model \(p_t\), the probability of nesting successfully in each year. In this case, the log link is not appropriate - \(p_t\) is bound between 0 and 1. For probabilities, the logit link is generally the appropriate link function:

\[logit(p_t) = log\bigg(\frac{p_t}{1-p_t}\bigg)\]

Following Kéry & Schaub, we’ll model probability as a quadratic function of year:

\[logit(p_t) = \alpha + \beta_1 \times year_t + \beta_2 \times year^2_t\]

As in the last example, there is a JAGS model file available in the WILD6900 package:

mod.file <- system.file("jags/GLM_Binomial.jags", package = "WILD6900")However, before looking at it, see if you can write out the model in the BUGS language (use the same priors from the previous example and note that there is a built in logit() function).

As before, we prepare the data and run the model:

year <- (falcons$Year - mean(falcons$Year))/sd(falcons$Year)

jags_data <- list(C = falcons$R.Pairs, N = falcons$Pairs, year = year, nyears = nrow(falcons))

jags_inits <- function(){list(alpha = rnorm(1), beta1 = rnorm(1), beta2 = rnorm(1), beta3 = rnorm(1))}

params <- c("alpha", "beta1", "beta2", "p")

nC <- 3

nI <- 10000

nB <- 2500

nT <- 1

falcon_mod <- jagsUI::jags(data = jags_data, inits = jags_inits,

parameters.to.save = params,

model.file = mod.file,

n.chains = nC, n.iter = nI,

n.burnin = nB, n.thin = nT)

#>

#> Processing function input.......

#>

#> Done.

#>

#> Compiling model graph

#> Resolving undeclared variables

#> Allocating nodes

#> Graph information:

#> Observed stochastic nodes: 40

#> Unobserved stochastic nodes: 3

#> Total graph size: 307

#> Warning in jags.model(file = model.file, data = data, inits = inits, n.chains =

#> n.chains, : Unused initial value for "beta3" in chain 1

#> Warning in jags.model(file = model.file, data = data, inits = inits, n.chains =

#> n.chains, : Unused initial value for "beta3" in chain 2

#> Warning in jags.model(file = model.file, data = data, inits = inits, n.chains =

#> n.chains, : Unused initial value for "beta3" in chain 3

#> Initializing model

#>

#> Adaptive phase.....

#> Adaptive phase complete

#>

#>

#> Burn-in phase, 2500 iterations x 3 chains

#>

#>

#> Sampling from joint posterior, 7500 iterations x 3 chains

#>

#>

#> Calculating statistics.......

#>

#> Done.

falcon_mod$summary[1:10,]| mean | sd | 2.5% | 25% | 50% | 75% | 97.5% | Rhat | n.eff | overlap0 | f | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha | 0.7814 | 0.0557 | 0.6725 | 0.7439 | 0.7809 | 0.8186 | 0.8931 | 1.000 | 18042 | 0 | 1.0 |

| beta1 | 0.0591 | 0.0455 | -0.0301 | 0.0280 | 0.0593 | 0.0908 | 0.1474 | 1.001 | 2224 | 1 | 0.9 |

| beta2 | -0.3035 | 0.0413 | -0.3839 | -0.3313 | -0.3039 | -0.2752 | -0.2226 | 1.000 | 4660 | 0 | 1.0 |

| p[1] | 0.4599 | 0.0371 | 0.3887 | 0.4342 | 0.4596 | 0.4853 | 0.5335 | 1.001 | 2110 | 0 | 1.0 |

| p[2] | 0.4821 | 0.0345 | 0.4159 | 0.4582 | 0.4817 | 0.5057 | 0.5502 | 1.001 | 2067 | 0 | 1.0 |

| p[3] | 0.5032 | 0.0318 | 0.4421 | 0.4814 | 0.5031 | 0.5250 | 0.5660 | 1.001 | 2031 | 0 | 1.0 |

| p[4] | 0.5233 | 0.0292 | 0.4668 | 0.5033 | 0.5233 | 0.5432 | 0.5807 | 1.001 | 2006 | 0 | 1.0 |

| p[5] | 0.5422 | 0.0268 | 0.4902 | 0.5238 | 0.5422 | 0.5605 | 0.5946 | 1.001 | 1994 | 0 | 1.0 |

| p[6] | 0.5599 | 0.0245 | 0.5120 | 0.5430 | 0.5599 | 0.5766 | 0.6079 | 1.001 | 1998 | 0 | 1.0 |

| p[7] | 0.5764 | 0.0225 | 0.5327 | 0.5610 | 0.5764 | 0.5916 | 0.6203 | 1.001 | 2024 | 0 | 1.0 |

# View traceplots for alpha, beta1, and beta2(not for p)

jagsUI::traceplot(falcon_mod, parameters=params[-4])

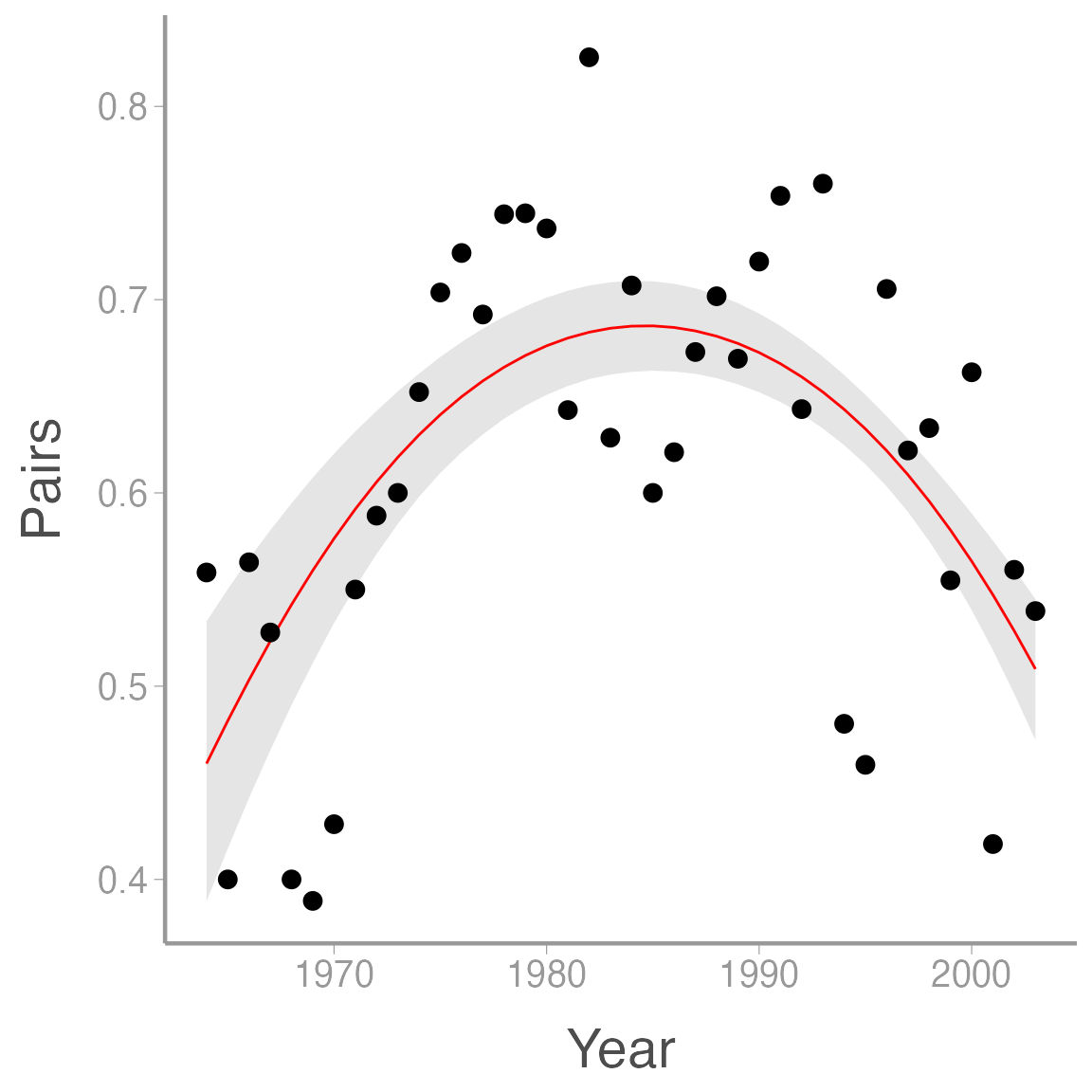

falcons <- dplyr::mutate(falcons, p = falcon_mod$mean$p,

q2.5_p = falcon_mod$q2.5$p,

q97.5_p = falcon_mod$q97.5$p)

ggplot(falcons) +

geom_ribbon(aes(x = Year, ymin = q2.5_p, ymax = q97.5_p), fill = "grey90") +

geom_path(aes(x = Year, y = p), color = "red") +

geom_point(aes(x = Year, y = R.Pairs/Pairs)) +

scale_y_continuous("Pairs")